
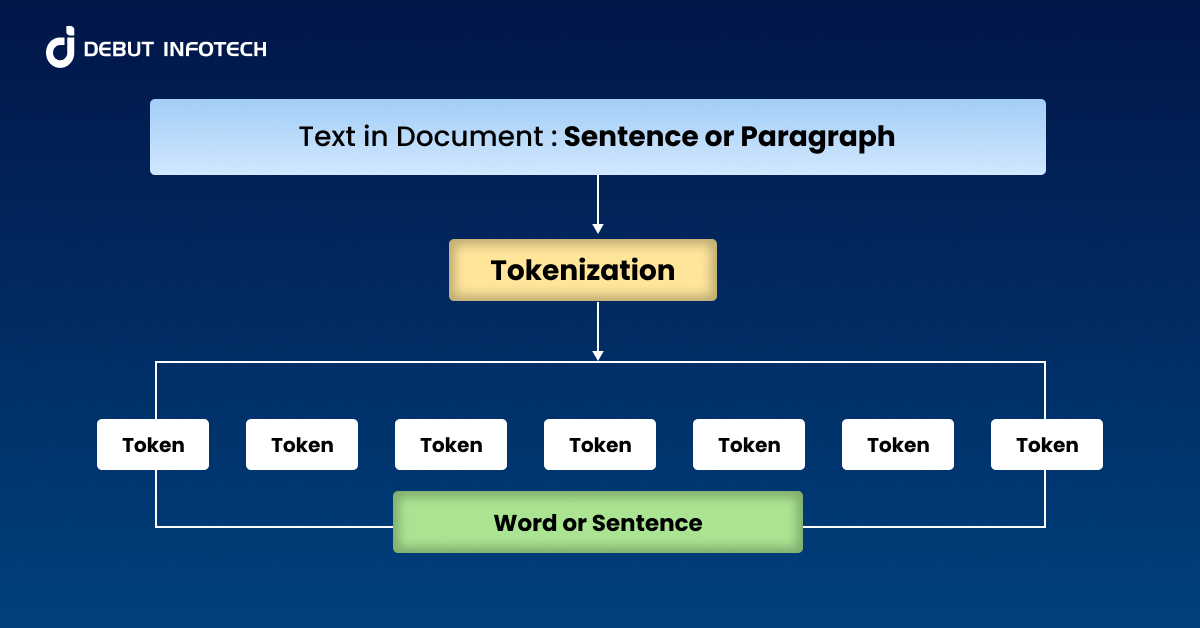

Tokenization is a foundational step in natural language processing (NLP) that breaks text into smaller, manageable units called tokens. These tokens, whether words, characters, or…
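As a minimal illustration, here is a sketch that splits text into word-level and character-level tokens using only Python's standard `re` module. It is not a production tokenizer. The function names `word_tokenize` and `char_tokenize` are hypothetical and unrelated to NLTK's function of the same name. The regular expression is also a simplifying assumption: real tokenizers handle Unicode, contractions, and subword units in more elaborate ways.

```python
import re

# Hypothetical word-level tokenizer (simplified assumption, not a library API):
# a token is either a run of word characters, optionally joined by one apostrophe
# (so "isn't" stays whole), or a single punctuation mark. Whitespace is dropped.
WORD_PATTERN = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def word_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens."""
    return WORD_PATTERN.findall(text)


def char_tokenize(text: str) -> list[str]:
    """Split text into character tokens, skipping whitespace."""
    return [ch for ch in text if not ch.isspace()]


if __name__ == "__main__":
    print(word_tokenize("Tokenization isn't hard!"))  # ['Tokenization', "isn't", 'hard', '!']
    print(char_tokenize("NLP"))                       # ['N', 'L', 'P']
```

The word-level output keeps the vocabulary human-readable but can grow very large. The character-level output uses a tiny, fixed vocabulary at the cost of much longer token sequences. This trade-off is why practical systems often choose a granularity between the two.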